📝 Research summary: What yesterday's digital assistants can teach us about tomorrow

A new paper shows that even Alexa and Siri give some of us the ick

A new paper in the Journal of Consumer Behaviour is both an interesting look at our relationships with digital assistants and a testament to how ill-equipped modern academic social science is to keep up with the pace of technological change we’re all living through. (The research was conducted in 2022, and the paper was published in the peer-reviewed journal only last week. Oof.) But the speed of academic research aside, the paper provides useful insights into our relationships with AI-powered digital assistants.

Importantly, because the research was done before the mass adoption of LLM-powered assistants like ChatGPT, Claude, and Gemini, the term “AI-powered” in this case refers to the machine learning, natural language processing, and other technologies underlying previous-generation assistants like Siri, Alexa, Google Assistant, and Samsung’s Bixby.

That cohort is, of course, already in its golden years. Let’s call them the Golden Girls, with no disrespect to queens Blanche, Sophia, Dorothy, and Rose. Google Assistant, bless her heart, still has a hard time remembering how to turn on NPR in my kitchen, and still hasn’t gotten over her love of plumbing the Spotify archives to share a cover, remix, or live version of a song instead of the original studio-recorded version. And she gets even less capable as time goes on.

But I digress. What, exactly, did the researchers aim to study and understand about our relationships with the Golden Girls? In a word: creepiness.

Although research exists on users' experiences with DAs, less is known about how user characteristics influence perceptions of a ‘dark side’—perceived creepiness.

(Blanche, maybe. But the others? Creepy? Rude.)

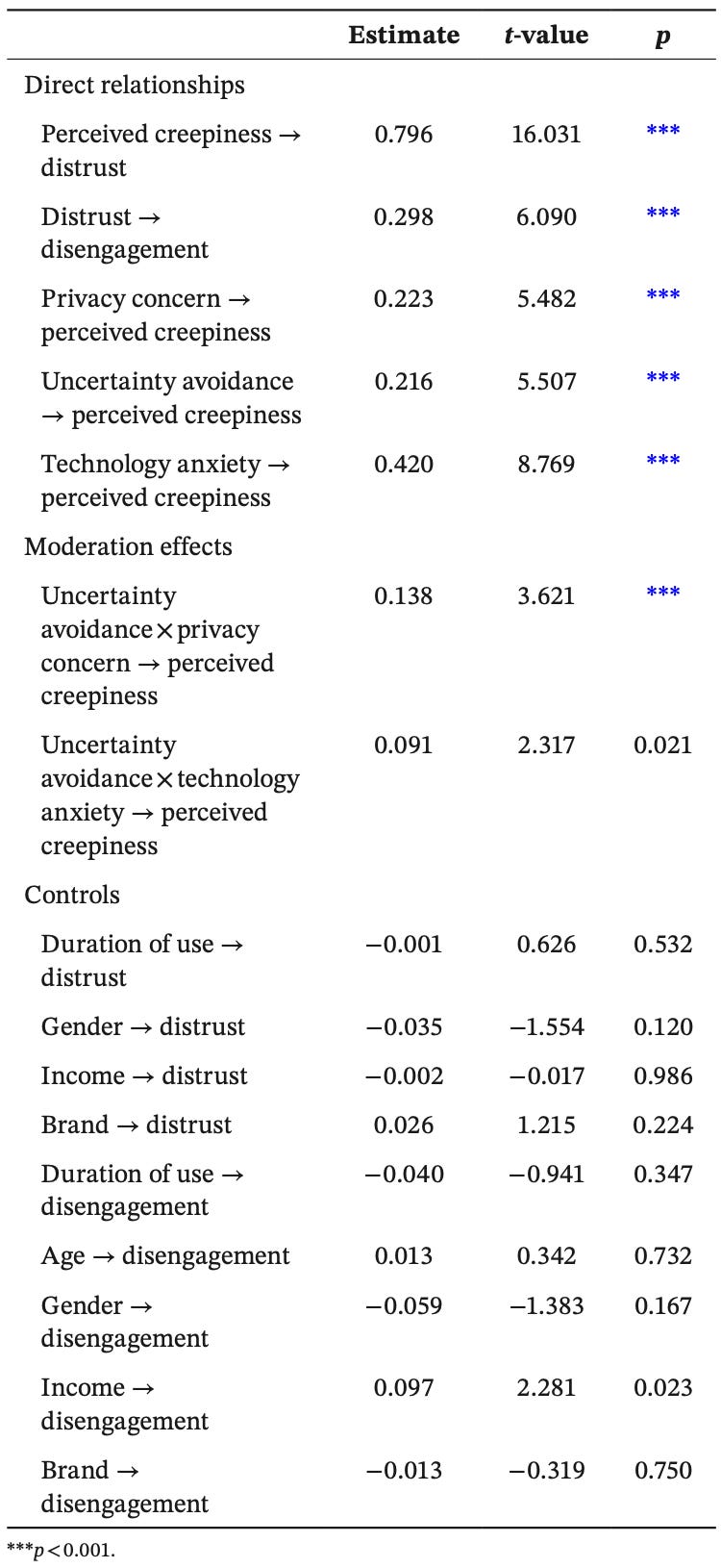

The researchers found evidence that three distinct sources of concern influence the extent to which we get creeped out by digital assistants:

Privacy concerns: worries about how personal information is collected and used

Technology anxiety: general unease about interacting with AI systems

Uncertainty avoidance: a personal tendency to feel uncomfortable with ambiguous situations

Beyond this, they observed that when we find digital assistant technology creepy, we tend to distrust it, and when we distrust it, we tend to disengage from it.

In the authors’ words:

> This study analyses the downsides (‘dark side’) of DAs by examining how user characteristics influence users' experiences. The proposed model demonstrates that privacy concerns, uncertainty avoidance, and technology anxiety have significant positive effects on perceived creepiness and distrust of DAs. This distrust leads to disengagement, where users are less likely to interact with DAs. Furthermore, the study reveals uncertainty avoidance acts as a moderator, strengthening the relationships privacy concerns and technology anxiety have with perceived creepiness. Interestingly, the study shows that these user characteristics (privacy concerns, uncertainty avoidance, and technology anxiety) indirectly influence distrust through perceived creepiness. Moreover, uncertainty avoidance moderates this indirect effect of privacy concerns on distrust. This means that for users with high uncertainty avoidance, privacy concerns have an even stronger influence on their feelings of creepiness, leading to greater distrust.

It’s a veritable domino-into-a-flywheel effect for the technology-skeptical: if you have privacy concerns or feel anxious about technology, you’re more likely to perceive technology like this as creepy; if you’re also a person who tends to avoid uncertainty, those concerns creep you out even more; if you consider it creepy, you’re more likely to distrust it; and if you distrust it, you’re more likely to just… avoid it altogether.

If this is how the more skeptical among us feel about our beloved Golden Girls, one can only imagine how those folks will feel about chatting with an Omniscient ScarJo.

Is this finding a bit tautological? Maybe. But nevertheless, it’s scientific evidence of an important truth as we start to see mass adoption of a new generation of far more powerful digital assistants. As AI gets more powerful in the short term, the returns and benefits of using it will likely increase, yet the gap between adopters and non-adopters may also grow, thanks in part to effects such as this one. It doesn’t seem unreasonable to me to imagine that human idiosyncrasies like this may contribute to a new digital divide, one that may make the one we worked hard to address with the internet seem quaint.

Thankfully, American society has collectively agreed that there’s a role for the federal government to play in addressing societal gaps like this, and we have a robust network of agencies staffed by our best and brightest conducting research and running programs to effectively address them!

Sigh.

As always, thanks for reading. I’ll try to dial down the snark next time.