📝 Research summary: User preferences and trust in AI voice assistants

Homophily, influence, and anthropomorphism, oh my!

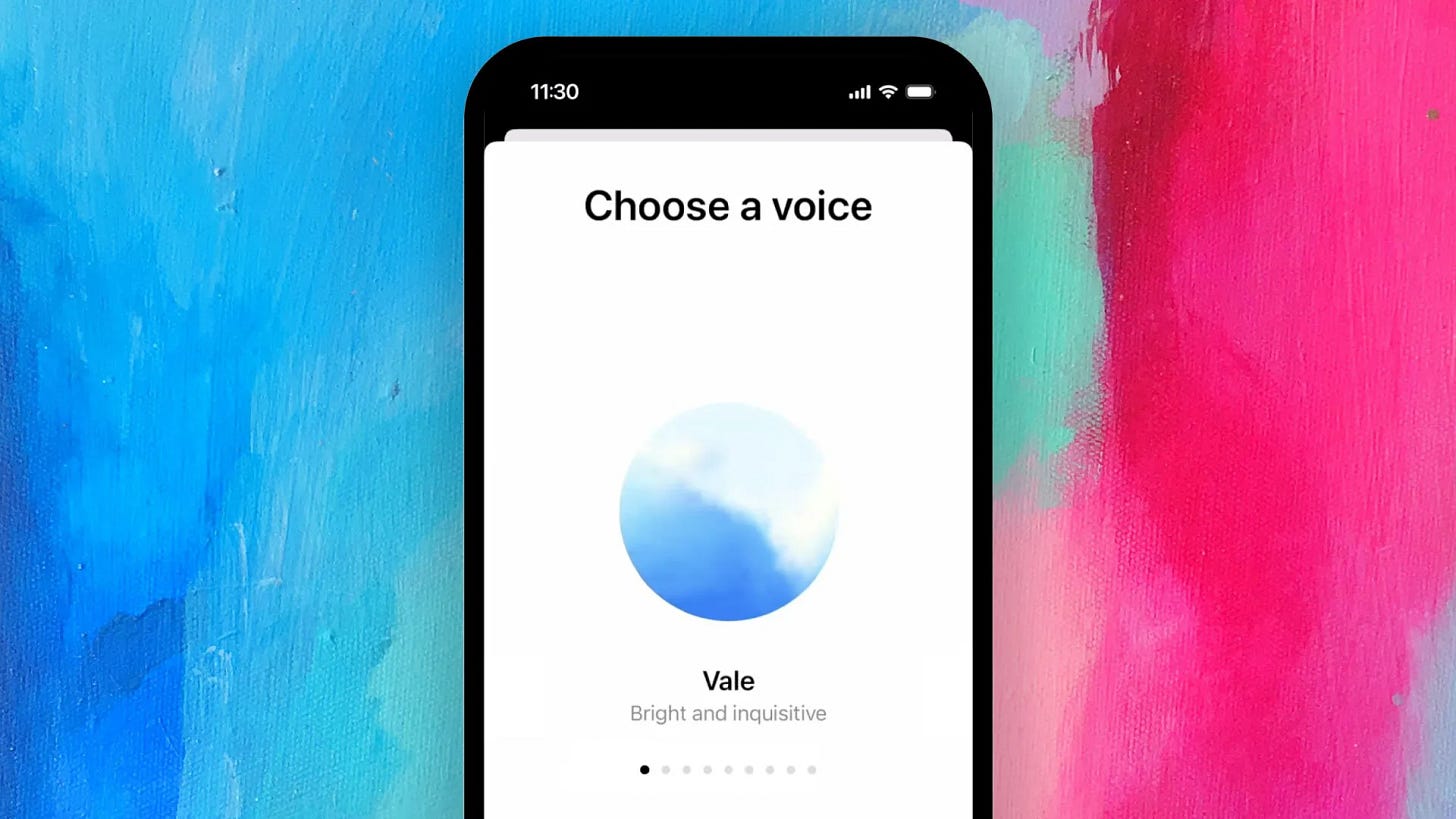

Say what you will about AI, but I think we can all be relieved that the era of extremely dumb voice assistants seems to be coming to an end thanks to LLMs. Anyone who has spent time with OpenAI’s Advanced Voice Mode or Gemini Live knows that we’ve entered a whole new era in terms of voice-based interaction with computers.

And with that comes a whole slew of new things to study and learn about how we interact with and feel about these assistants. A new paper takes an interesting look at this by studying the preferences people have for the AI voice assistants they use. Among the findings (note that the sample consisted exclusively of students at an American university):

- Nearly half of participants (43%) took the time to switch the “embodied voice interface” (EVI) used in voice assistants
- They generally preferred EVIs with American and British accents; British accents, however, were more trusted
- “Participants preferred EVIs that matched their characteristics”

I didn’t pay for the whole paper, so I’m only seeing the abstract, but these seem to be in line with what one might expect. The theory of homophily famously suggests that birds of a feather tend to flock together. It stands to reason that people would state a preference for a voice assistant that sounds like someone similar to them.

Beyond the observations about user preference with respect to EVIs, the paper also makes some interesting observations about trust. Seemingly independent of the EVI used, it notes:

> Most trusted their VAs’ information accuracy because they used the internet to find information, reflecting inadequate mental models… A significant correlation was found between the participants’ perceived intelligence of their VAs and trust in information accuracy.

This called to mind a massive paper released last year from Google DeepMind on “The Ethics of Advanced AI Assistants.” The paper (a whopper at 200+ pages before its footnotes) explores a huge range of dimensions along which AI assistants should be viewed, built, and evaluated. A whole section focuses on human-assistant interaction, covering topics like influence, trust, and anthropomorphism. There, the authors observe:

> When anthropomorphic features are embedded in conversational AI, its users demonstrate a tendency to develop trust in and attachment to AI (Skjuve et al., 2021; Xie and Pentina, 2022)

and:

> Users who interact with DVAs with realistic voice production capabilities exhibit a concerning inclination to generalise purely human concepts to digital assistants (Abercrombie et al., 2021). When a DVA’s simulated voice mimics a ‘female’ tone, for example, people ascribe gendered stereotypes to their DVAs (Shiramizu et al., 2022; Tolmeijer et al., 2021)… This evidence suggests that, once initial impressions of human-likeness have been established, the process of anthropomorphism extends beyond context-specific instances and instead permeates broadly to evoke a wide range of human-like attributions.

We’ve already started down this path, where we are ascribing distinctly human traits and characteristics to different models. The added layer of a dynamic, customizable, and human-like voice with which to interact with them makes people even more prone to being influenced by them in ways that they don’t fully understand.

One of the lessons from this new paper and the existing body of research referenced by DeepMind is that interfaces matter. The voice your AI assistant uses will influence how trustworthy you think it is, and will therefore shape when and how you use it and how you feel about it. I suspect that the colors, font, and animation styles (and, as we’ve seen in other research, use of citations) of chatbots have similar effects.

The downstream implications of these hundreds of small design decisions are massive. People are already falling in love with chatbots, a phenomenon that will likely only intensify as advanced voice models become more effective and more common across apps and tools.

This is surely one of the most important aspects of AI that doesn’t get enough attention in the current discourse. There seems to be a desire to laugh at or dismiss the idea of human-AI relationships, but I think they will be not only wildly common but also one of the most important areas of study as we try to understand what our evolving relationship with technology looks like.

In the same way that people are infinitely inscrutable (we can never fully disentangle a person’s motivations, desires, and behaviors, or how they influence the world around them in big and small ways), we will likely come to some sort of acceptance of AI being similarly inscrutable and mysterious. And that acceptance of a certain level of mystery may in itself be the foundation for a whole new type of human relationship with technology.