📝 Research summary: How do Americans feel about AI? It's complicated.

New data from YouGov paints a classic picture of optimism bias in the wild

It’s an interesting thing to be living through a time when we’re all learning about and coming to terms with a profound new technology together. It takes time for attitudes to settle into a shared consensus about each new medium, gadget, or capability that emerges. YouGov recently shared new survey data suggesting we’re still in the messy middle of that process with AI.

YouGov’s own writeup of the findings paints a fairly negative picture of how Americans feel about artificial intelligence, and there is definitely evidence in the data to support that framing.

About one-third (36%) of Americans are very or somewhat concerned that artificial intelligence will cause the end of the human race on earth — fewer than in April 2023. Among people who know a great deal about AI, 49% are concerned about this.

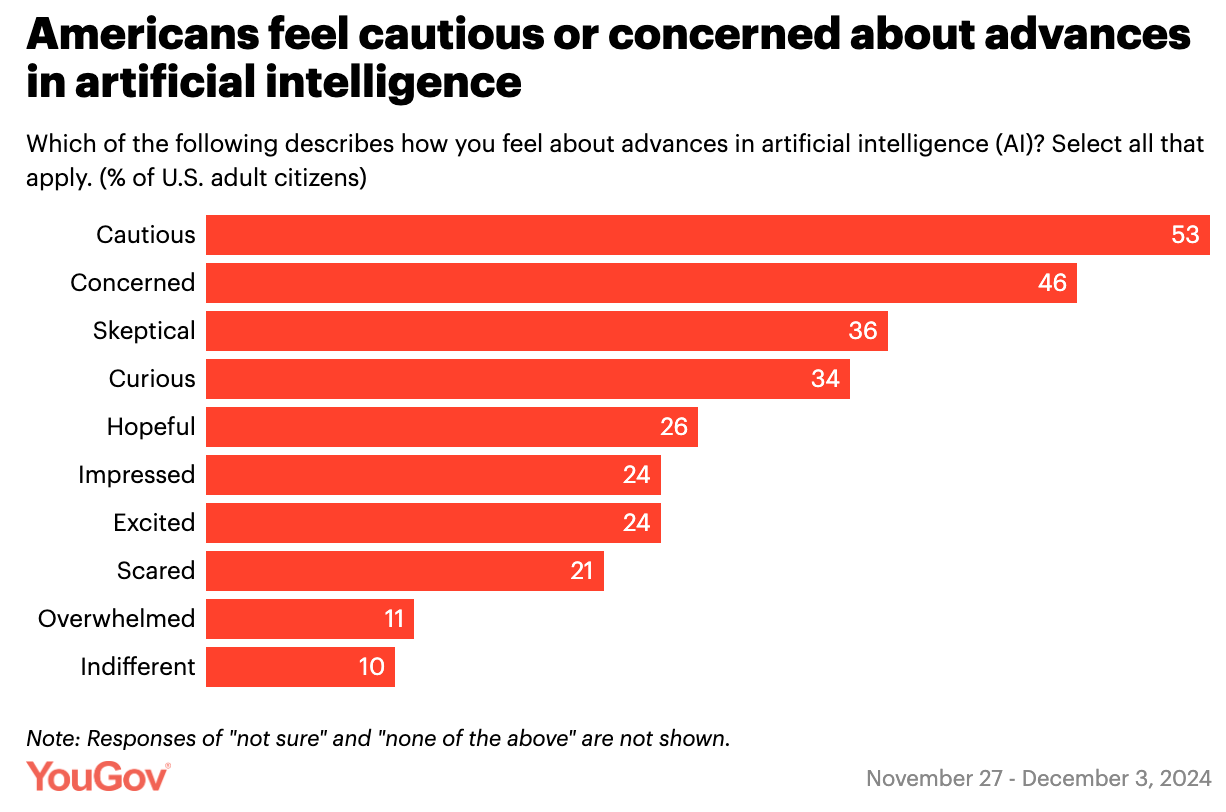

As far as concerns about the implications of a new technology go, that one seems… nontrivial. There are other red flags, too. When respondents chose from a balanced set of words describing how one could feel about AI, “cautious” and “concerned” topped the list.

The survey also measured how many Americans trust AI to be ethical (only 27%), unbiased (only 34%), and truthful (a notably higher 49%).

But the topline data and crosstabs (which I encourage you to explore) reveal a more interesting and nuanced reality. For example, Americans are split almost perfectly between those who think AI’s effects on society will be positive (35%) and those who think they’ll be negative (34%).

And when people consider the technology’s effects on their own lives, their attitudes actually skew more optimistic. A third of Americans believe AI will have a positive effect on their life, compared to 21% who believe it will have a negative effect.

That optimism seems to be at odds with other results from the survey. Responses about the risk of job loss, the ethical behavior of AI companies, and the government’s ability to meaningfully regulate the space all show considerably more skepticism.

You could argue that this is a pretty clear-cut case of optimism bias. People tend to rate the risk of a bad event in general as higher than the risk of that event happening to them personally. Sure, bad things happen, but I don’t think bad things will happen to me.

It’s a revealing truth about where we are in our relationship with AI, and one that resonates with my personal feelings. I can (and do) intellectually recognize the risks of AI’s capabilities at both the micro and macro levels, yet I still get excited when I see a new way to incorporate them into my life or work. Snapshots like this one are a good reminder of that reality, and a good reminder to pump the brakes a bit before we find ourselves getting out over our skis.

AI is here to stay, and I’d say the next five years will be about deploying it in a trustworthy way.